In our role as a team that supports digital and online learning and teaching, we have been thinking a lot recently about generative AI: how we use it, how we support it, and how we talk about and describe it. In this blog post, we will share some of our thinking around this. Obviously, things are changing apace, and we want to emphasise that our thinking is also in development, always subject to change and new influences as we continue to learn.

A learning ‘journey’

Deciding how to talk about generative AI is especially complicated, given that many of the narratives around AI come from the world of science fiction. This makes it difficult for the casual user to distinguish between what is possible, what is persuasive marketing, and what is imaginative fiction dreamed up and shared on social media. In 2018 the Royal Society published a comprehensive report illustrating this, Portrayals and perceptions of AI and why they matter, which discusses the effects these narratives may have.

One of the primary ways humans learn about and interact with the world is through metaphor: sometimes metaphors we personally choose, and sometimes those already embedded in our language. In higher education, for example, we may choose to talk about a learning ‘journey’, understanding that this doesn’t represent a physical movement but an evolution of understanding. With a new technology like generative AI, the way the conversation around it is shaped, and the metaphors we choose to use, can have a significant impact on how people think about it and what they expect from it.

And while they are an important tool, metaphors can also hinder the development of a shared understanding. “…it is important to be aware of the limitations of metaphor use in an educational environment. Inappropriate uses, opaque uses, or metaphors which do not have a high degree of similarity between the two topics may create difficulties for learners or impede the educational process.” (Roe, Furze & Perkins, 2024, p. 4)

Given these potential pitfalls, how might we carefully use language in a way that helps colleagues to understand AI technology and its implications?

Who’s steering this thing?

An additional layer of complication in this metaphorical mess is the way that programmers and marketers have chosen to use language, both in the outputs that AI produces and in the promotional materials that describe the tools. Here, the metaphor of AI as something that thinks, something that has knowledge, has been designed in from the ground up. When AI produces outputs in language that sounds like a person, it is easy for us to take this as a sign that the tool is something we can trust. It seems as though our question has been understood, when in reality the words have been placed into the output sequence according to statistical likelihoods. (For more about this, read On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? by Bender et al.)

For example, an AI tool might output text saying that it is “happy to help”, or, if it cannot produce an output that answers your prompt, text that says “I am sorry”. But as something that cannot think, how can it ‘be’ anything at all?

With this in mind, our team has chosen to use language carefully and deliberately when we talk about generative AI. We work to describe AI as a tool, and we want colleagues to understand that they are responsible for any of its outputs that they use. (See our blog post, What is authorship, anyway? for more.)

Taking the metaphorical wheel

So how has our team tried to do this?

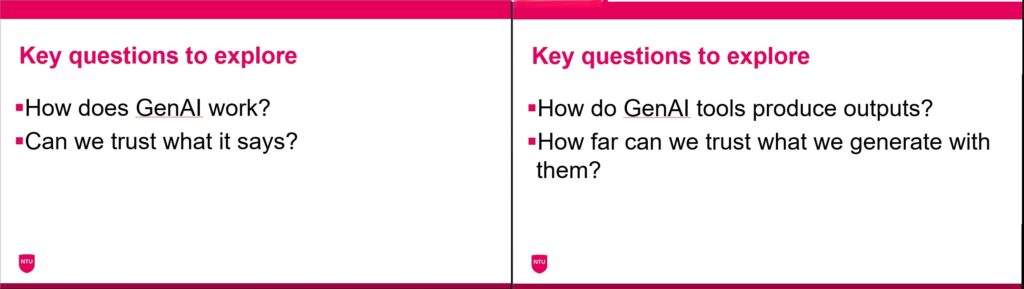

The following images show the evolution of some introductory questions for a generative AI training session we will be working through with our colleagues at Nottingham Trent University (the earlier version is on the left):

We have reworded the questions to deliberately remove any implicit suggestion that generative AI has agency or consciousness, and have used language that frames GenAI as a ‘tool’. This metaphor carries related implications that we hope it helps to convey:

- A tool is often something you must learn to use safely

- A tool requires the user’s knowledge and understanding to create a useable output

- A tool is not responsible for what it is used to create.

These implications of the chosen metaphor are important concepts that we want to convey whenever we communicate about AI.

Likewise, in the second question we emphasise the user’s control and agency by reiterating that the output is something we, the users, have caused generative AI to produce. Choosing not to use the word ‘says’ is our way of avoiding humanising language.

So that’s how we’ve chosen to talk about AI:

- Using language that doesn’t humanise AI

- Using language that emphasises that AI is a tool

- Using language that keeps responsibility for outputs of AI with the user.

The road ahead

We are taking these considerations about careful language forward in our work to develop a set of critical reflective prompts, designed to support conversations between students and colleagues who are thinking about the ways they use generative AI. We promise to share more detail about these when we’re further along in our journey!

Roe, J., Furze, L. & Perkins, M. (2024). Funhouse Mirror or Echo Chamber? A Methodological Approach to Teaching Critical AI Literacy Through Metaphors. arXiv. https://doi.org/10.48550/arXiv.2411.14730

Author: Bethany Witham

Learning Technologist

Learning and Teaching Support Unit (LTSU)

School of Arts and Humanities

Nottingham Trent University